The evolution of enterprise technology is marked by a shift away from traditional software structures toward AI-Native Software: systems built with artificial intelligence at their core. In this approach, model-driven logic sits at the center of application design, in place of purely rule-based programming. Companies like Jasper and Copy.ai are often cited as examples of AI-native products: they use generative models to handle complete processes, such as producing written content from start to finish.

The distinction between AI-Native Software and older “AI-enabled” systems is rooted in architectural intent. AI-enabled systems are typically developed by retrofitting AI capabilities onto existing infrastructure, and they are often constrained by legacy systems that were not designed for specialized computational needs. This technical debt can lead to suboptimal performance because the systems fail to use advanced hardware efficiently.

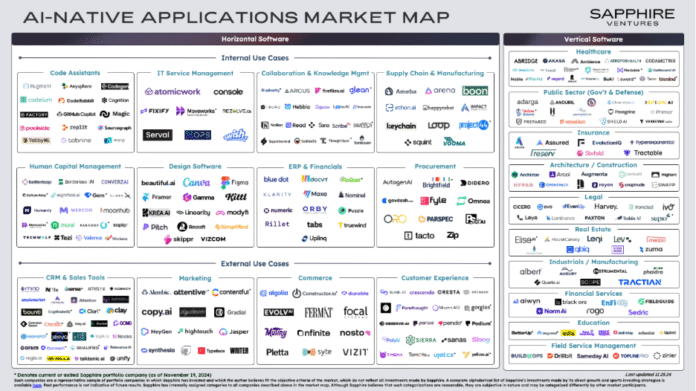

Source: sapphireventures

Most older systems weren’t really built for the kind of nonstop learning and real-time decision-making that today’s AI tools depend on. AI-native platforms, on the other hand, are created from the start with that goal in mind. They use flexible setups that combine powerful chips, such as GPUs and TPUs, with innovative software that can adjust its own behavior as conditions change.

What used to be ordinary technical debt in legacy systems now ends up slowing progress and holding back innovation.

AI-native applications also focus on maintaining a continuous flow of data. They utilize event-driven architectures, change data capture (CDC), and streaming transformations to remain responsive in real-time.
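To make the CDC idea concrete, here is a minimal sketch of a consumer that applies a stream of change events to an in-memory view. The `ChangeEvent` shape and the `apply_changes` helper are assumptions made for illustration; they do not mirror the wire format of any particular CDC tool.

```python
from dataclasses import dataclass
from typing import Any, Dict, Iterable, Optional


@dataclass(frozen=True)
class ChangeEvent:
    """One change-data-capture record (hypothetical shape)."""
    op: str                               # "insert", "update", or "delete"
    key: str                              # primary key of the changed row
    row: Optional[Dict[str, Any]] = None  # new row image; None for deletes


def apply_changes(
    view: Dict[str, Dict[str, Any]], events: Iterable[ChangeEvent]
) -> Dict[str, Dict[str, Any]]:
    """Apply change events in order so the view tracks the source table."""
    for event in events:
        if event.op in ("insert", "update"):
            view[event.key] = dict(event.row or {})
        elif event.op == "delete":
            view.pop(event.key, None)
        else:
            raise ValueError(f"unknown operation: {event.op}")
    return view


events = [
    ChangeEvent("insert", "u1", {"plan": "free"}),
    ChangeEvent("insert", "u2", {"plan": "pro"}),
    ChangeEvent("update", "u1", {"plan": "pro"}),
    ChangeEvent("delete", "u2"),
]
view = apply_changes({}, events)
print(view)
```

Because each event is applied as it arrives, the view stays current without waiting for a periodic batch transfer.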

Because of this built-in ability to learn and adapt, the core logic of these systems is constantly evolving. That is a sharp contrast to traditional software, which remains mostly static after deployment.

Architectural Comparison: AI-Enabled Versus AI-Native Software

| Feature | AI-Enabled (Embedded) | AI-Native (Foundational) |
| --- | --- | --- |
| Architectural Intent | Retrofit AI onto existing, rule-based infrastructure. | Built from inception with model-driven logic at the core. |
| Data Processing | Typically, batch processing and periodic data transfers. | Event-driven architecture; real-time inference and streaming. |
| Infrastructure | Standardized servers; often resource-constrained. | Adaptive infrastructure, utilizing specialized hardware (GPUs/TPUs) efficiently. |
| Adaptation | Periodic retraining requires manual intervention. | Continuous learning, adaptation, and self-improvement, built in. |

Architecture for Autonomy: The Self-Evolving Stack

I’ve seen a fundamental shift in the way new systems behave. What used to be static code now seems almost alive. The change originates from autonomous Agentic AI solutions—programs that make their own decisions and learn as they operate. They watch, react, and adjust without someone standing over their shoulder. The real magic happens inside the evolution engine. It sits next to the live app, noticing patterns, timing, and even the odd hiccup.

This dedicated layer runs alongside the application and continuously monitors its execution. Using models of the current system configuration and a reusable knowledge base, the evolution engine decides automatically when and how the system should evolve or reconfigure. This dynamic adaptation of a software system’s structure and behavior in response to changing conditions is known as adaptive architecture.
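One way to picture that decision step is as a loop that checks observed metrics against a small knowledge base of rules. The sketch below is only an illustration: the `Rule` structure, the metric names, and the thresholds are all assumptions, and a real evolution engine would be far richer.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple

Config = Dict[str, int]
Metrics = Dict[str, float]


@dataclass(frozen=True)
class Rule:
    """One reusable piece of adaptation knowledge (hypothetical structure)."""
    name: str
    applies: Callable[[Metrics, Config], bool]  # decides *when* to evolve
    reconfigure: Callable[[Config], Config]     # decides *how* to evolve


# Hypothetical knowledge base; metric names and thresholds are illustrative.
KNOWLEDGE_BASE: List[Rule] = [
    Rule(
        name="scale_out",
        applies=lambda m, c: m["p95_latency_ms"] > 500,
        reconfigure=lambda c: {**c, "replicas": c["replicas"] + 1},
    ),
    Rule(
        name="scale_in",
        applies=lambda m, c: m["utilization"] < 0.2 and c["replicas"] > 1,
        reconfigure=lambda c: {**c, "replicas": c["replicas"] - 1},
    ),
]


def evolve(config: Config, metrics: Metrics) -> Tuple[Config, Optional[str]]:
    """Return the next configuration and the name of the rule that fired."""
    for rule in KNOWLEDGE_BASE:
        if rule.applies(metrics, config):
            return rule.reconfigure(config), rule.name
    return config, None  # nothing to change right now


new_config, fired = evolve(
    {"replicas": 2}, {"p95_latency_ms": 800.0, "utilization": 0.9}
)
print(new_config, fired)
```

Keeping the rules as data, separate from the loop, is what makes the knowledge base reusable across applications.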

Source: ericsson

The pairing of an evolution engine with AI-managed infrastructure signals more than a technical upgrade—it’s a turning point from standard automation toward genuinely autonomous operations. Infrastructure is no longer just a collection of static systems. It’s starting to act with intent, learning how to build, adapt, repair, and optimize itself without waiting for manual input.

You can think of agentic orchestration as the control room behind modern intelligent systems. It keeps a whole network of AI agents, automation bots, and people working together, rather than apart. The goal isn’t just efficiency—it’s coordination. Each piece is aware of what the others are doing, which helps the system function as a cohesive unit.

These orchestration layers also tie together everything that already exists in an organization: data pipelines, analytics engines, and all those specialized platforms built over time. By linking them, operations become easier to scale, and resources can shift naturally as priorities change throughout the day.

When companies start adding more autonomous agents—often developed by different vendors—the problem becomes more complex. Integration and oversight quickly become critical. That’s where Agent-to-Agent (A2A) frameworks come in. They provide these agents with a secure and structured way to communicate, while ensuring compliance with regulations. In fields such as healthcare or finance, where rules surrounding data are strict, this structure enables collaboration without compromising compliance.

Operationalizing Continuous Adaptation Through MLOps

Continuous adaptation is impossible without rigorous Machine Learning Operations (MLOps) practices, which streamline the entire ML lifecycle—from continuous integration (CI) to continuous delivery (CD). The inherently dynamic nature of AI-Native Software elevates MLOps from an organizational best practice to a core operational necessity.

The main threat to a deployed model is drift. As production data shifts, new patterns emerge and accuracy fades. If the issue isn’t caught early, performance drops fast, sometimes leading to serious mistakes in critical applications.

Modern teams often rely on self-adaptive testing frameworks to maintain the stability of AI systems over time. These frameworks don’t just run tests—they learn. By tracking how data shifts, how models age, and where pipelines start to wobble, they can flag problems early through their built-in feedback loops.

At the center of this setup is what’s called the Adaptive Control Engine. It uses reinforcement learning to determine when a model should be retrained or revalidated, making that call automatically instead of waiting for someone to intervene. It also refines validation thresholds dynamically, based on performance monitoring in production.
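As a rough illustration of how reinforcement learning can drive a retrain decision, the sketch below learns a value for each (state, action) pair with epsilon-greedy exploration. It is a one-step, bandit-style simplification, and the states, actions, and reward numbers are all assumptions chosen for the example, not details of any specific Adaptive Control Engine.

```python
import random

STATES = ("stable", "drifting")
ACTIONS = ("wait", "retrain")

# Hypothetical rewards: retraining fixes drift but wastes compute when unneeded.
REWARD = {
    ("stable", "wait"): 0.0,
    ("stable", "retrain"): -0.2,
    ("drifting", "wait"): -1.0,
    ("drifting", "retrain"): 1.0,
}


def best_action(q, state):
    """Return the action with the highest learned value for this state."""
    return max(ACTIONS, key=lambda action: q[(state, action)])


def train(steps=200, alpha=0.1, epsilon=0.2, seed=0):
    """Learn action values from simulated feedback."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in STATES for a in ACTIONS}
    for step in range(steps):
        state = STATES[step % len(STATES)]  # alternate states for simplicity
        if rng.random() < epsilon:
            action = rng.choice(ACTIONS)    # explore
        else:
            action = best_action(q, state)  # exploit
        reward = REWARD[(state, action)]
        # One-step value update: move the estimate toward the observed reward.
        q[(state, action)] += alpha * (reward - q[(state, action)])
    return q


q_values = train()
policy = {state: best_action(q_values, state) for state in STATES}
print(policy)
```

In production the reward would come from monitored outcomes, such as accuracy recovered versus compute spent, instead of a fixed table.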

Statistical validation layers are crucial for detecting data drift. In practice, teams rely on a few simple but powerful checks to see when data starts to behave differently from what the model expects. The Kolmogorov–Smirnov test and the Jensen–Shannon divergence, for example, both compare today’s data with the baseline used during training. When the distributions diverge, the system notices fast. That quick detection helps engineers keep accuracy steady and fix issues before they ripple through production.
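Both checks are small enough to sketch in plain Python. The version below computes the two-sample Kolmogorov–Smirnov statistic (the largest gap between two empirical CDFs) and the Jensen–Shannon divergence between two histograms. The function names and the `DRIFT_THRESHOLD` value are assumptions for illustration; a production setup would more likely use a statistics library and a properly calibrated threshold or p-value.

```python
import bisect
import math


def ks_statistic(baseline, current):
    """Two-sample KS statistic: max distance between the empirical CDFs."""
    a, b = sorted(baseline), sorted(current)
    d = 0.0
    for x in a + b:
        cdf_a = bisect.bisect_right(a, x) / len(a)
        cdf_b = bisect.bisect_right(b, x) / len(b)
        d = max(d, abs(cdf_a - cdf_b))
    return d


def js_divergence(p, q):
    """Jensen-Shannon divergence (base 2, so in [0, 1]) of two histograms.

    p and q must be probability vectors over the same bins.
    """
    def kl(x, y):
        return sum(xi * math.log2(xi / yi) for xi, yi in zip(x, y) if xi > 0)

    m = [(pi + qi) / 2 for pi, qi in zip(p, q)]
    return 0.5 * kl(p, m) + 0.5 * kl(q, m)


DRIFT_THRESHOLD = 0.2  # hypothetical cut-off, not a recommended value

training_sample = [0.1, 0.2, 0.3, 0.4, 0.5]
live_sample = [0.6, 0.7, 0.8, 0.9, 1.0]
drifted = ks_statistic(training_sample, live_sample) > DRIFT_THRESHOLD
print(drifted)
```

The KS statistic works directly on raw numeric samples, while the Jensen–Shannon divergence needs the data binned into matching histograms first, which makes it a natural fit for categorical features.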

The framework also relies on a knowledge graph that tracks the connections between datasets, models, and metrics. Because everything is linked, it’s easier to retrace how a prediction was made when auditors or regulators ask. The same structure keeps records consistent over time and helps people trust automation that continually learns and adapts on its own.
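A lineage graph like this can be very simple at its core. The sketch below stores "derived from" edges and walks them upstream to answer an audit question. The `LineageGraph` class and the node names are hypothetical and only meant to show the idea.

```python
from collections import defaultdict


class LineageGraph:
    """Tiny lineage store: each node points to the things it was derived from."""

    def __init__(self):
        self._parents = defaultdict(set)

    def link(self, child, parent):
        """Record that `child` was produced from `parent`."""
        self._parents[child].add(parent)

    def trace(self, node):
        """Return every upstream node reachable from `node`."""
        seen = set()
        stack = [node]
        while stack:
            current = stack.pop()
            for parent in self._parents.get(current, ()):
                if parent not in seen:
                    seen.add(parent)
                    stack.append(parent)
        return seen


graph = LineageGraph()
# Hypothetical identifiers, for illustration only.
graph.link("prediction:42", "model:v3")
graph.link("model:v3", "dataset:training-v1")
graph.link("model:v3", "metric:validation-auc")
print(sorted(graph.trace("prediction:42")))
```

Tracing from a single prediction returns the model, the dataset, and the metric behind it, which is the kind of trail an auditor would ask for.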

The Market Impact And Value Capture (2026 Projections)

The emergence of custom generative AI and custom agentic AI solutions represents a foundational shift, one that many expect to be even more disruptive than the software-as-a-service (SaaS) revolution before it. People in the industry are already discussing the sheer scale of what’s to come. Some forecasts suggest that generative AI could add over $4.4 trillion annually to the global economy. It’s a massive number, and software companies are well positioned to capture a significant share of it, as they’re the ones actually integrating AI into everyday products.

You can see that momentum showing up in spending trends as well. Global IT budgets are projected to reach approximately $6.08 trillion in 2026, an increase of roughly 10% over 2025. Much of that new money is going straight into AI infrastructure: data centers, chips, and tools to keep these models running.

Software is expected to be one of the fastest-growing segments. In 2026, it could be worth around $1.43 trillion, a roughly 15% increase from the previous year; only data center systems are forecast to grow faster, at about 19%. That growth is being driven not just by demand, but also by the rising cost of integrating AI features across every type of platform and service.

Here’s the Worldwide IT Spending Forecast for 2026:

| Segment | 2026 Spending (USD Millions) | 2026 Growth Rate (%) |
| --- | --- | --- |
| Software | 1,433,037 | 15.2 |
| Data Center Systems | 582,446 | 19.0 |
| IT Services | 1,869,269 | 8.7 |
| Overall IT Spending | 6,084,085 | 9.8 |
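For readers who want to check the arithmetic, the short sketch below backs out the 2025 baseline implied by each row (2026 spending divided by one plus the growth rate) and the software share of the total. The figures come straight from the table; the helper name `implied_prior_year` is just for illustration, and the derived values are back-of-the-envelope estimates, not published numbers.

```python
# (2026 spending in USD millions, 2026 growth rate in %), copied from the table.
FORECAST = {
    "Software": (1_433_037, 15.2),
    "Data Center Systems": (582_446, 19.0),
    "IT Services": (1_869_269, 8.7),
    "Overall IT Spending": (6_084_085, 9.8),
}


def implied_prior_year(spend_2026, growth_pct):
    """Back out the prior-year figure implied by a growth rate."""
    return spend_2026 / (1 + growth_pct / 100)


for segment, (spend, growth) in FORECAST.items():
    baseline = implied_prior_year(spend, growth)
    print(f"{segment}: implied 2025 baseline of about {baseline:,.0f} (USD millions)")

software_share = FORECAST["Software"][0] / FORECAST["Overall IT Spending"][0]
print(f"Software share of 2026 spending: {software_share:.1%}")
```

The four rows do not sum to the overall figure because the table shows only selected segments.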

For established companies, adopting AI-native software is not merely an optimization exercise, but a critical defensive strategy. New entrants are already using these architectures to push the limits of speed, scale, and adaptability. At the same time, major AI vendors are embedding their own tools directly into everyday workflows, effectively stepping into the same space once dominated by traditional SaaS providers. The result is growing competitive pressure on incumbents who move too slowly.

AI-native products stand out because they combine deep domain know-how with automation that covers the whole workflow. Take healthcare as an example. These tools are already reducing time and cost in areas such as clinical documentation—roughly a $600 million market—and in billing and coding automation, which presents an additional $450 million or so in opportunity. Those are clear, measurable returns.

On the consumer side, the same technology drives an entirely different kind of advantage: personalization. AI-native applications learn from moment-to-moment data — from location and browsing activity to timing and context — to shape each user’s experience. This responsiveness is essential, as 71% of consumers report they expect companies to deliver personalized content.

Navigating The Challenges: Governance And Trust

The increased autonomy inherent in self-evolving applications introduces a complex set of ethical and operational challenges. The U.S. Department of Homeland Security has even identified “autonomy” as a risk for key sectors, such as finance and healthcare, where a single mistake can have far-reaching consequences.

The biggest risk isn’t only failure—it’s surprise. Things break, or algorithms take actions no one intended. That’s why safety features can’t wait until the end; they have to grow alongside the technology itself. Keeping human values in the loop—clear governance, transparency, and sensible energy use—is what makes powerful systems dependable ones.

Public opinion is another story. People still hesitate when machines start deciding issues that affect lives, from law enforcement to public services. Worries about jobs, misinformation, and the lack of empathy in automated choices haven’t gone away. Until those fears ease, society is likely to move slowly toward full trust in autonomy.

The complexity of autonomously evolving systems introduces an accountability paradox. If adaptive AI modifies its own code or configuration, the cause of an error can only be explained when explainability and fairness features are embedded in the system’s design from the start. This is where architectural features like Knowledge Graph Integration (maintaining an auditable trail of decisions and data relationships) become essential for regulatory compliance.

To maintain trust and mitigate risk, organizations may be compelled to implement human–AI hybrid solutions rather than fully autonomous systems. The architecture must accommodate rigorous governance frameworks, including defined human approval checkpoints and safety measures like tracing and replay capabilities within production agent paths. This structural requirement prioritizes system resilience and robustness over pure speed, ensuring autonomous operations remain transparent and accountable.
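A human approval checkpoint with a trace can be sketched in a few lines. In the example below, high-risk actions run only if an approval callback says yes, and every decision is appended to a trace that can be read back later. The `AgentRunner` class, the action names, and the `approve` callback are all hypothetical; a real deployment would persist the trace and route approvals to actual reviewers.

```python
from dataclasses import dataclass, field
from typing import Callable, FrozenSet, List, Tuple


@dataclass
class AgentRunner:
    """Gate risky agent actions behind a human and record every decision."""
    approve: Callable[[str], bool]  # human approval hook (hypothetical)
    high_risk: FrozenSet[str] = frozenset({"issue_refund", "delete_record"})
    trace: List[Tuple[str, str]] = field(default_factory=list)

    def execute(self, action: str) -> str:
        if action in self.high_risk and not self.approve(action):
            outcome = "blocked"
        else:
            outcome = "executed"
        self.trace.append((action, outcome))  # audit trail entry
        return outcome

    def replay(self) -> List[Tuple[str, str]]:
        """Return the recorded steps in order, for audits or debugging."""
        return list(self.trace)


# A reviewer who rejects everything, to show the gate in action.
runner = AgentRunner(approve=lambda action: False)
runner.execute("summarize_ticket")
runner.execute("issue_refund")
print(runner.replay())
```

Low-risk actions pass straight through, so the checkpoint adds friction only where a mistake would be costly.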

Conclusion: Embracing The Adaptive Imperative

AI-Native Software represents the inevitable trajectory for enterprise systems, moving away from static, rule-based infrastructure toward architectures defined by continuous adaptation and model-driven autonomy. The core technical differentiator lies in the integration of specialized adaptive infrastructure, event-driven data flows, and autonomous mechanisms like the evolution engine and agentic orchestration.

Operationalizing this shift requires disciplined, self-adaptive MLOps practices. These pair statistical checks, such as the Kolmogorov–Smirnov test for drift detection, with Adaptive Control Engines that use reinforcement learning to keep models accurate and reliable. This kind of governance is non-negotiable for systems that learn and reconfigure autonomously.

IT spending worldwide is projected to pass the $6 trillion mark in 2026. That kind of growth doesn’t just expand the market; it shakes it up. For long-time software players, moving toward an AI-native architecture has become a survival strategy.

It’s about staying relevant while the industry tilts toward systems that deliver full, end-to-end results instead of just individual tools. The real advantage now comes from showing clear outcomes for customers—efficiency gained, time saved, and measurable progress.